An introduction to Transformer for people outside the NLP field, with NLP examples

I found it quite difficult to explain the mathematical details of long short-term memory (LSTM) in my previous article series. But while I was studying LSTM, a promising new model was already attracting attention. The model is named Transformer. It was first announced in a paper titled “Attention Is All You Need,” and it outperformed conventional translation algorithms at a lower computational cost.

In this article series, I am going to explain the minimum prerequisites for understanding deep learning in NLP (natural language processing) tasks. NLP is not the main focus of this series, however, and I do not actually work in the NLP field. I think Transformer is going to become a major deep learning model alongside CNN and RNN, and it is already being applied in various fields.

Even though Transformer is going to be a very general deep learning model, I still believe that learning it through some NLP is effective, because language is a topic we all have in common. Unlike my previous article series, in which I tried to explain the theoretical side of RNN as precisely as possible, this series focuses on practical matters, using my toy implementations of NLP tasks, which are largely based on the official TensorFlow tutorial. I will still do my best to make the architecture of Transformer as straightforward to understand as possible, with various original figures.

This series is going to be composed of the articles below.

- On the difficulty of language: prerequisites for NLP with Transformer

- Seq2seq model and attention mechanism: a backbone of NLP with deep learning

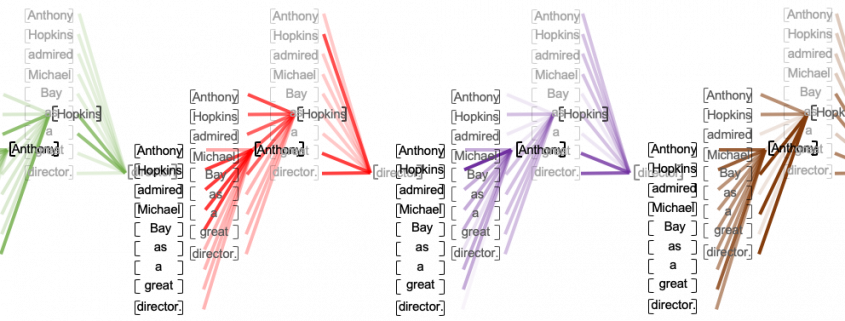

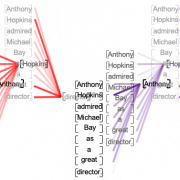

- Multi-head attention: the key component of Transformer

- Positional encoding, residual connections, padding masks: covering the rest of Transformer components

- How to make a toy English-German translator with multi-head attention heat maps: the overall architecture of Transformer

- Transformer in image processing (Coming soon)

If you are in the field and can read the code in the official tutorial with no questions, this article series is not for you. But if you want to see how a Transformer works without going too deep into the details of NLP, this series is for you.
