A comprehensive guide compiled to introduce readers to AI platforms, their types, and benefits. A concluding section to discuss AI platform selection strategy with Attri’s Best of Breed approach to build AI platforms.

Don’t you think that this century is really fortunate? In my opinion, the answer is yes; we witnessed technological transformations and their miracles that created substantial changes in our lifestyle. While talking about these life-changing technological revolutions, AI or artificial intelligence deserves a front seat due to its incredible contribution and capabilities. Now everyone knows AI has limitless potential simply from creating funny faces in mobile to taking informed and intelligent business decisions. In the last 50 years, we have progressed by leaps and bounds to give machines the ability to understand, help and mimic us.

Artificial intelligence enables machines to imitate human intelligence across a variety of domains ranging from problem-solving and reasoning to General Intelligence and in-depth knowledge representation. With tremendous progress in AI, another enabler came into existence and received attention—AI platforms. AI-platform is a layer that integrates all the tools and processes required to build, deploy and monitor ML models. In this article, we shall go through the various aspects of AI platforms covering a range of topics like AI Platform types, the benefits such platforms entail, selection strategy in detail as well as a brief look into Attri’s industry contribution with an Open AI Platform.

Diving Deeper With AI Platforms

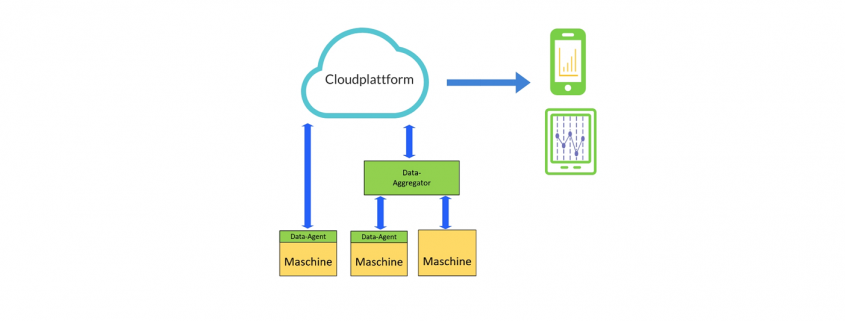

The AI Platform acts as a layer over your current AI infrastructure and integrates all the tools and processes required to develop ML models. It provides you the flexibility to integrate all your ML models under a single roof. With this flexibility, you can create and deploy several ML models over the platform. Further, you can even monitor these models to confirm that they are serving their intended purpose. AI platform makes your AI adoption easy by attaining the following requirements–

- Use of vast data to develop ML solutions.

- Ensure transparency and reproducibility within a project

- Accelerate collaboration and governance within teams

- Ensure scalability for ever-growing machine learning demands

An ideal AI platform should ensure the following features for better addressing different challenges.

- Seamless access control: Ensure robust access control to team members in order to conquer the challenge of centralized data access with AI projects.

- Excellent monitoring: Integrate top-notch observability practices while developing ML models.

- Data and technology-agnostic integration: Seamless experience to enterprises with infrastructure set up responsibility handed over to platform providers

- All-inclusive Platform: Single platform to facilitate all underlying tasks from data preparation to model deployment

- Continuous Improvement: Ability to produce and deploy models as a reproducible package and thereby integrate changes with models that are already in production

- Rapid Processing: Faster data preparation and powerful visual interfaces

AI Platform Classification

With loads of AI platform providers available in the market, AI platform classification becomes a tough job, as it requires thinking separately on each platform’s offerings, its features, and cost factors. Also, you need to check whether AI solutions are open source AI platforms or proprietary offerings.

We have decided to present an AI platform classification based on its striking features and offerings. With this, we have classified AI platforms across three main classes—

- AI cloud-based platforms

- AI conversational platforms

- No code AI platforms

All major cloud providers offer cloud-based AI platforms to boost businesses with AI capabilities. With cloud AI platforms, enterprises can leverage cloud providers’ matchless technical expertise to overcome affordability and data requirement challenges associated with AI implementation. Cloud-based AI offerings benefit businesses with economic AI solutions, defined and pre-packaged services, lower risks, and modern technology.

Amazon Web Services

AWS offers a comprehensive set of AI solutions to conquer major hurdles in the AI adoption journey of businesses. AWS has been recognized as the topmost cloud AI partner with its broad capable portfolio. AWS pre-trained models cater to diverse use cases like forecasting, recommendations, computer vision, language interpretation, customer engagement, and safety for deploying ML models at scale. Amazon also provides text analytics, NLP, chatbots, and document analysis solutions. Fully managed AWS packages amplify your experience with minimum resource requirements and wizard-based friendly model development experience. Hence, AWS is one of the top cloud AI partners that cater to your AI adoption needs.

Google cloud

The Google Cloud Platform (GCP) is a Google offering for cloud-driven computing services devised to support multiple use cases such as hosting containerized applications, massive-scale data analytics platforms, and even applying ML and AI for business use cases. Google AI Platform is a Google Cloud offering that helps build, deploy and manage machine learning models in the cloud.

Google leverages enterprise AI experience through its consumer-facing products. Google helps improve customer satisfaction through Contact Center AI. Google offering DialogFlow CX is used to create advanced chatbots that handle customer messaging, response, and voice recognition. Digiflow is applied to create virtual agents for messaging services, mobile apps, and IoT devices.

Google’s Cloud Vision API is beneficial to recognize objects, logos, and landmarks within content or images. Google provides Natural Language API to bring more clarity in content classification, entities, syntax, and sentiments. Further, Google speech API helps in converting audio to text and recognizing 110 languages.

Google’s Cloud ML services facilitate better decision-making with end-to-end ML solutions. Google offers an all-inclusive ML development platform that enables effective decision-making backed by explainable AI, continuous evaluation, data labeling, pipelines, training, and what-if tool. This platform is based on the TensorFlow framework and it enables building predictive models for various scenarios.

Kubeflow is a Cloud-Native and open-source platform that helps you build portable ML pipelines that can be executed on-premises or on the cloud. With this, you can access Google technologies like TPUs, TensorFlow, and TFX tools as you deploy your ML models in production.

For expert ML developers, Google provides an Open Source AI platform with TensorFlow models that are trained for various scenarios. It offers an excellent prediction service using trained models.

Microsoft Azure

Similar to Amazon Web Services, Microsoft Azure ML capabilities are based on its real-time and live applications. Azure provides superior machine learning capabilities to develop, train, and deploy machine learning models through Azure Machine Learning, Azure Databricks, and ONNX.

A Python-based ML service to facilitate automated machine learning.

An open-source model format enables machine learning through various frameworks and hardware platforms of the user’s choice.

Formerly known as Azure Search,this is the only cloud search service that allows built-in AI capabilities to explore content effectively at scale. Microsoft empowers the user with cognitive search services like text analytics, translation, document analytics, custom vision, and Azure Machine Learning solutions.

IBM Cloud

IBM has brought Watson studio a data analysis application to accelerate innovation and ML-centric practices in business. IBM Cloud AI Platform offers 170 services with more emphasis on data-speech conversions and analytics. Watson Studio offers an all-inclusive suite to work with data and train, build and deploy ML models.

An innovative giant IBM also brought AI based learning platform recently to aid academic stakeholder like students, researchers and teachers.

AI Conversational Platforms

Conversational AI opens new doors for automated conversations between an enterprise and its customers. These conversations include messaging or voice-based communication platforms to enable text or audio-based conversation.

Conversational platforms leverage your customer experience with a range of applications such as follow-up, guidance, or the resolution of customer queries and round-the-clock support. These platforms are beneficial to drive more leads, increase conversions by cross-selling and upselling, promotional efforts, customer research, queries resolution and customer feedback handling, etc.

AI technology helps systems to mimic human conversations to a certain level and with great accuracy. An AI offering- Natural Language processing is used to shape these conversations by understanding intent, text, speech, and languages.

Intelligent Virtual Assistants

The intelligent virtual assistants represent an advanced level of Conversational AI and their discussion is incomplete without a mention to Siri and Alexa. Most popular intelligent virtual assistants include Siri by Apple, Alexa by Amazon, Google Assistant, and Bixby by Samsung. While Alexa performs as a voice assistant for the home, Siri and Bixby stand as mobile assistants with numerous operations support like navigation, text-to-speech, response to weather, quick reply, and address search.

SAP Conversational AI

SAP Conversational AI is one of the leading conversational AI platforms. With its friendly UI and multiple versioning, it offers a better experience of mimicking human conversations. SAP Conversational AI Platform uses NLP to facilitate developing chatbot that works more humanely and serves your customers 24*7. Its striking features include—

- Simple integration

- NLP capabilities

- Analytics tools to help you

- Multi-language support

Clinc

A powerful self-learning Conversational AI Platform enriched with NLP capabilities and machine learning. It secures top position in the Conversational AI Platform list due to its learning from previous conversations and improving responses over time. Its feature set include—

- No technical expertise required

- Self-learning abilities

- NLP capabilities

Kore.ai

An enterprise-grade Conversational AI Platform to cater to your consumer as well as staff needs. It helps to build a virtual chatbot for any suitable platform without compromising the safety and security standards. Its major features cover—

- The high degree of customization for chatbots

- Comprehensive analytics with FAQs and alerts

- Simple integration with ML models and channels

- Flexible deployment

- Supported with a multi-pronged NLP engine

Mindmeld

It is an excellent option as a Deep-Domain Conversational AI Platform with NLP capabilities. It can be used for both text-based and voice-based virtual assistants. This platform effectively caters to multiple industries and their numerous use cases. Check its striking features list—

- Open-source platform

- NLP capabilities

- Supports discovering on-demand video or music

- Quick chat-based transactions

No Code AI Platforms

As discussed above, AI platform classification necessitates platform considerations from various perspectives. We are introducing another category of AI platforms—No Code AI Platforms. The motivation behind introducing these platforms is to encourage enterprise AI adoption while keeping AI implementation costs low and minimizing dependencies on skilled professionals. Many IT giants are now offering no-code AI Platforms to enterprises for their AI adoption.

Google ML Kit

Google ML Kit comes with Android and iOS and it facilitates the integration of functions with lesser codes or with minimum knowledge of machine learning algorithms. This open source AI Platform supports different features such as text recognition, face detection, and landmark recognition.

RapidMiner Studio

RapidMiner Studio enables powerful data analytics with drag and drop features. Rapidminer Studio allows easy integration with databases, warehouses, social media for easy data access by authorized persons.

ML Platform Selection Strategy

Having discussed so many types of ML platforms, their features, and offerings, the next question is–how to select the best ML Platform for an enterprise AI adoption. Well, to answer this Million-Dollar question, we need to consider a few key aspects, such as

- Who will use and benefit from the AI Platform? It is required to find out AI platform users here, the data science team, analytics team, developers, and how the platform will benefit each stakeholder.

- The next aspect is to explore the skill levels of AI platform users, are they competent to handle ML development and analytics requirements with years of experience

- Proficiency of users with programming languages

- The next point in finalizing the AI platform strategy is to conclude code-first or code-free approaches to streamline AI workflows. This aspect can be studied by thinking about different attributes such as data preparation ease, feature engineering automation, ML algorithms, Model Deployment ease, and platform integration aspects.

Once you come up with answers to these queries, you will be able to finalize the best AI Platform Selection strategy for your enterprise. It can be a unique cloud platform, or even it can be a hybrid solution with a “best-of-breed” approach.

All-in-one platform strategy involves getting one end-to-end platform for the entire AI project lifecycle from raw data prep to ETL to building and operationalizing models followed by monitoring and governance of systems.

The best-of-breed approach allows using the preferred and custom tools for each phase of the lifecycle and aligning these tools together to build a customized platform solution for AI adoption.

This approach offers an excellent AI platform solution for organizations looking for flexible, inexpensive, change-oriented AI solutions and having a DIY spirit. With this mix-and-match approach, you can combine APIs offered by different cloud platforms and deliver AI solutions that cater to your AI use cases. Organizations using the best-of-breed approach are more comfortable with technology shifts with their abilities to use, adopt and swap out tools as requirement changes.

Business Process AI Transformation Simplified With Attri’s Open AI Platform

At Attri, we provide AI platform solutions to diverse industry verticals. With our flagship Open AI Platform, we heighten your AI adoption experience with a rich array of platform features like—

- Customizable best-of-breed architecture

- Utilize existing infrastructure

- AI as a platform solution

- Reduced effort in migrating to a new technology

- Centralized Monitoring and Governance

- Explainable and Responsible AI

We help you achieve your business process transformation goals with our unique AI offerings such as Open AI Platform and Open AI solutions.

Our AI platform assures multiple benefits to your enterprise while keeping AI adoptions costs low and ensuring faster AI implementations. We can summarize the benefits of Attri Open AI Platform as under–

No efforts in reinventing complete AI suites

Attri’s AI Platform integrates multiple AI services and eliminates the need for reinventing complete AI suites. The platform delights enterprises with scalability, the ability to reuse current infrastructure, and customizable architecture.

Accelerated Go To Market

Attri’s Open AI Platform ensures accelerated GTM with a sincere approach to testing, reviewing, and finalizing reference templates for different industries.

No vendor lock-in

With Open AI Platform, we bring client-friendly policies such as no vendor lock-in and flexibility to choose their preferred tools and technology.

High reliability

We keep our AI Platform highly reliable with a comprehensive testing approach. We also meet the growing requirements of enterprises by ensuring high scalability with our open AI platform.

Get connected with us for your enterprise AI adoption requirements.

Know more about our Open AI Platform…