Illustrative introductions on dimension reduction

“What is your image on dimensions?”

….That might be a cheesy question to ask to reader of Data Science Blog, but most people, with no scientific background, would answer “One dimension is a line, and two dimension is a plain, and we live in three-dimensional world.” After that if you ask “How about the fourth dimension?” many people would answer “Time?”

You can find books or writings about dimensions in various field. And you can use the word “dimension” in normal conversations, in many contexts.

*In Japanese, if you say “He likes two dimension.” that means he prefers anime characters to real women, as is often the case with Japanese computer science students.

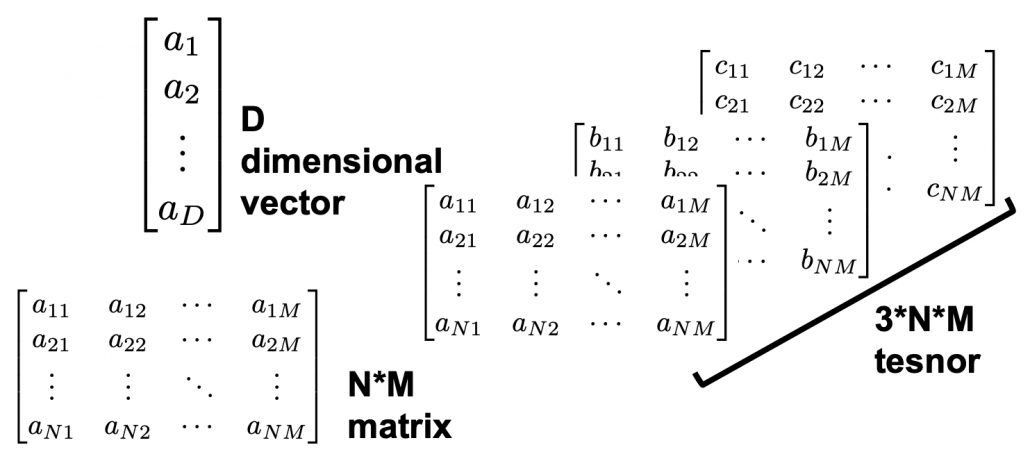

The meanings of “dimensions” depend on the context, but in data science dimension is usually the number of rows of your Excel data.

When you study data science or machine learning, usually you should start with understanding the algorithms with 2 or 3 dimensional data, and you can apply those ideas to any D dimensional data. But of course you cannot visualize D dimensional data anymore, and you always have to be careful of what happens if you expand degree of dimension.

Conversely it is also important to reduce dimension to understand abstract high dimensional stuff in 2 or 3 dimensional space, which are close to our everyday sense. That means dimension reduction is one powerful way of data visualization.

In this blog series I am going to explain meanings of dimension itself in machine learning context and algorithms for dimension reductions, such as PCA, LDA, and t-SNE, with 2 or 3 dimensional visible data. Along with that, I am going to delve into the meaning of calculations so that you can understand them in more like everyday-life sense.

This article series is going to be roughly divided into the contents below.

- Curse of Dimensionality

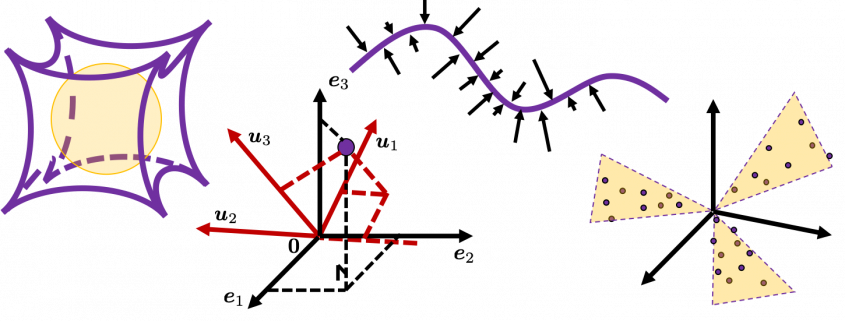

- Rethinking linear algebra: visualizing linear transformations and eigen vector

- The algorithm known as PCA and my taxonomy of linear dimension reductions

- Rethinking linear algebra part two: ellipsoids in data science

- Autoencoder as dimension reduction (to be published soon)

- t-SNE (to be published soon)

I hope you could see that reducing dimension is one of the fundamental approaches in data science or machine learning.

Number of rows or number of columns of Excel data