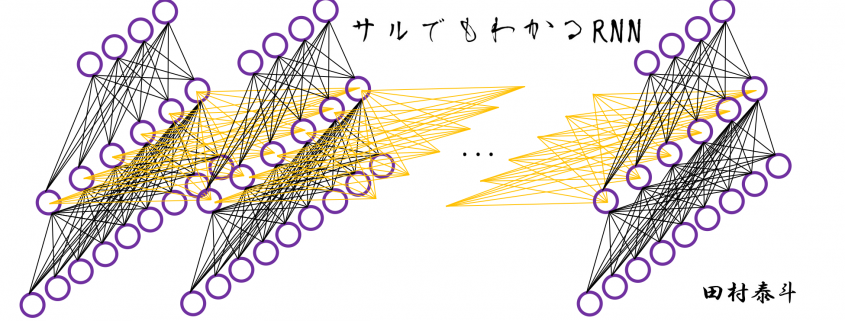

A gentle introduction to the tiresome part of understanding RNN

As often happens in a casual conversation in a random pub or bar in Berlin, people ask me “Which language do you use?” I always answer “LaTeX and PowerPoint.”

I have been doing an internship at DATANOMIQ, trying to make straightforward but precise study materials on deep learning. I started learning machine learning in April 2019, and I have been self-studying during my one-year vacation in Berlin.

Many study materials give good explanations of densely connected layers or convolutional neural networks (CNNs). But when it comes to back propagation in CNNs and recurrent neural networks (RNNs), I think there is much room for improvement in making the topic understandable to learners.

Many study materials avoid the points I want to understand, and that was as frustrating to me as listening to answers to questions in the Japanese Diet, or listening to speeches from the current Japanese minister of the environment. With the slightest common sense, you would always be left asking “How?” after reading an RNN chapter in any book.

This blog series focuses on the introductory level of recurrent neural networks. By “introductory”, I mean prerequisites for a better and more mathematical understanding of RNN algorithms.

I am going to keep these posts as visual as possible, avoiding equations, but I will also attach links to more precise mathematical explanations.

This blog series consists of five articles:

- Prerequisites for understanding RNN at a more mathematical level

- Simple RNN: the first foothold for understanding LSTM

- A brief history of neural nets: everything you should know before learning LSTM

- Understanding LSTM forward propagation in two ways

- LSTM back propagation: following the flows of variables
