How the Pandemic is Changing the Data Analytics Outsourcing Industry

While media pundits have largely focused on the impact of COVID-19 as far as human health is concerned, it hasn’t been particularly good for the health of automated systems either. As cybersecurity budgets plummet in the face of dwindling finances, computer criminals have taken the opportunity to increase attacks against high value targets.

In June, an online antique store suffered a data breach that contained over 3 million records, and it’s likely that a number of similar attacks have simply gone unpublished. Fortunately, data scientists are hard at work developing new methods of fighting back against these kinds of breaches. Budget constraints and a lack of personnel as a result of the pandemic continues to be a problem, but automation has helped to assuage the issue to some degree.

AI-Driven Data Storage Systems

Big data experts have long promoted the cloud as an ideal metaphor for the way that data is stored remotely, but as a result few people today consider the physical locations that this information is stored at. All data has to be located on some sort of physical storage device. Even so-called serverless apps have to be distributed from a server unless they’re fully deployed using P2P services.

Since software can never truly replace hardware, researchers are looking at refining the various abstraction layers that exist between servers and the clients who access them. Data warehousing software has enabled computer scientists to construct centralized data storage solutions that look like traditional disk locations. This gives users the ability to securely interact with resources that are encrypted automatically.

Background services based on artificial intelligence monitor virtual data warehouse locations, which gives specialists the freedom to conduct whatever analytics they deem necessary. In some cases, a data warehouse can even anonymize information as it’s stored, which can streamline workflows involved with the analysis process.

While this level of automation has proven useful, it’s still subject to some of the problems that have occurred as a result of the pandemic. Traditional supply chains are in shambles and a large percentage of technical workers are now telecommuting. If there’s a problem with any existing big data plans, then there’s often nobody around to do any work in person.

Living with Shifting Digital Priorities

Many businesses were in the process of outsourcing their data operations even before the pandemic, and the current situation is speeding this up considerably. Initial industry estimates had projected steady growth numbers for the data analytics sector through 2025. While the current figures might not be quite as bullish, it’s likely that sales of outsourcing contracts will remain high.

That being said, firms are also shifting a large percentage of their IT spending dollars into cybersecurity projects. A recent survey found that 37 percent of business leaders said they were already going to cut their IT department budgets. The same study found that 28 percent of businesses are going to move at least some part of their data analytics programs abroad.

Those companies that can’t find an attractive outsourcing contract might start to patch their remote systems over a virtual private network. Unfortunately, this kind of technology has been strained to some degree in recent months. The virtual servers that power VPNs are flooded with requests, which in turn has brought them down in some instances. Neural networks, which utilize deep learning technology to improve themselves as time goes on, have proven more than capable of predicting when these problems are most likely to arise.

That being said, firms that deploy this kind of technology might find that it still costs more to work with automated technology on-premise compared to simply investing in an outsourcing program that works with these kinds of algorithms at an outside location.

Saving Money in the Time of Corona

Experts from Think Big Analytics pointed out how specialist organizations can deal with a much wider array of technologies than a small business ever could. Since these companies specialize in providing support for other organizations, they have a tendency to offer support for a large number of platforms.

These representatives recently opined that they could provide support for NoSQL, Presto, Apache Spark and several other emerging platforms at the same time. Perhaps most importantly, these organizations can work with Hadoop and other traditional data analysis languages.

Staffers working on data mining operations have long relied on languages like Hadoop and R to write scripts that they later use to automate the process of collecting and analyzing data. By working with an organization that already supports a language that companies rely on, they can avoid the need of changing up their existing operations.

This can help to drastically reduce the cost of migration, which is extremely important since many of the firms that need to migrate to a remote system are already suffering from budget problems. Assuming that some issues related to the pandemic continue to plague businesses for some time, it’s likely that these budget constraints will force IT departments to consider a migration even if they would have otherwise relied solely on a traditional colocation arrangement.

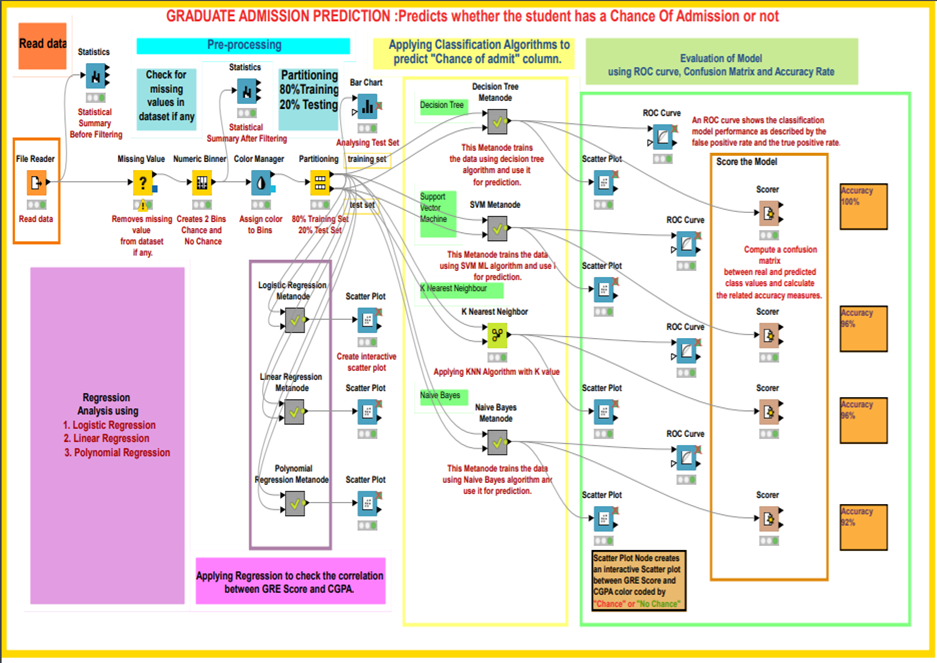

IT department staffers were already moving away from many rare platforms even before the COVID-19 pandemic hit, however, so this shouldn’t be as much of a herculean task as it sounds. For instance, the KNIME Analytics Platform has increased in popularity exponentially since it’s release in 2006. The fact that it supports over 1,000 plug-in modules has made it easy for smaller businesses to move toward the platform.

The road ahead isn’t going to be all that pleasant, however. COBOL and other antiquated languages still rule the roost at many governmental big data processing centers. At the same time, some small businesses have never even been able to put a big data plan into play in the first place. As the pandemic continues to wreak havoc on the world’s economy, however, it’s likely that there will be no shortage of organizations continuing to migrate to more secure third-party platforms backed by outsourcing contracts.