In today’s digital age, software development is one of the most lucrative careers. Custom software developers abound, offering all sorts of services to businesses around the world. Software developers of all kinds, whether vendors, full-time staff, contract workers, or part-time workers, are important members of the Information Technology community.

There are different career paths to choose from in the world of software development. Two of the most promising are software development itself and data science. What exactly do these careers involve?

Software developers are the creative minds behind all kinds of computer programs. Some focus on a specific app or program, while others build large networks or underlying systems that power and trigger other programs. That’s why software developers fall into two classifications: application software developers and systems software developers.

Data scientists, on the other hand, are a new breed of analytical data experts who have the technical skills to solve complex problems as well as the curiosity to explore which problems need solving. In any custom software development service, data scientists are part trend-spotter, part mathematician, and part computer scientist. And since they straddle both the IT and business worlds, they’re in high demand and well paid.

When it comes to custom software development, and software development in general, which career is more promising? Let’s find out.

The Differences Between Data Science and Software Development

Although both fields are highly technical and share many of the same skills, there are huge differences in how those skills are applied. To help you decide which career path to choose, let’s compare them and identify the most critical differences.

The Methodologies

Data Science Methodology

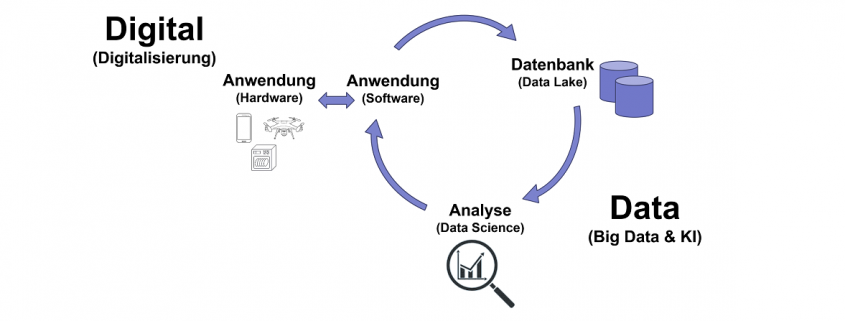

A person can enter the data science pipeline at several different points. Someone who gathers data is probably called a data engineer: they pull data from different sources, clean and process it, and store it in a database. This is usually referred to as the ETL (extract, transform, and load) process.
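To make the ETL idea concrete, here is a minimal sketch using only Python’s standard library. The CSV sample, its column names, and the `extract`, `transform`, and `load` function names are hypothetical; a real pipeline would pull from APIs, files, or source databases and load into a production database such as PostgreSQL rather than the in-memory SQLite database used here.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real pipeline would read from APIs, files, or source databases.
RAW_CSV = """name,signup_date,revenue
 Alice ,2023-01-05,120.50
Bob,2023-01-07,
 carol,2023-01-09,80
"""


def extract(raw):
    """Extract: parse raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows):
    """Transform: normalize names, drop rows missing revenue, cast revenue to float."""
    cleaned = []
    for row in rows:
        revenue = row["revenue"].strip()
        if not revenue:
            continue  # skip incomplete records instead of loading bad data
        cleaned.append((row["name"].strip().title(), row["signup_date"].strip(), float(revenue)))
    return cleaned


def load(records, conn):
    """Load: write cleaned records into a database table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (name TEXT, signup_date TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real database such as PostgreSQL
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT name, revenue FROM customers ORDER BY name").fetchall())
    # [('Alice', 120.5), ('Carol', 80.0)]
```

Keeping the three stages as separate functions mirrors the pipeline description: each step can be tested or swapped out (for example, a different source or target database) without touching the others.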

Someone who uses data to create models and perform analysis is probably called a ‘data analyst’ or a ‘machine learning engineer’. The critical aspects of this part of the pipeline are making certain that models don’t violate their underlying assumptions and that they drive worthwhile insights.

Software Development Methodology

In contrast, software development uses the SDLC, or software development life cycle, methodology. This workflow is used to develop and maintain software, and its steps are planning, implementing, testing, documenting, deploying, and maintaining.

In theory, following one of the SDLC models leads to software that runs at peak efficiency and makes future development easier.

The Approaches

Data science is a very process-oriented field. Its practitioners consume and analyze data sets to better understand a problem and come up with a solution. Software development, by contrast, is more about approaching tasks with existing methodologies and frameworks. For example, the Waterfall model is a popular method in which every phase of the software development life cycle must be completed and reviewed before moving on to the next.
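The Waterfall gating rule can be sketched as a small state machine in Python. The `Phase` and `WaterfallProject` names are hypothetical, and using the six SDLC steps listed in this article as the Waterfall phases is an assumption for illustration; textbook Waterfall descriptions often name phases such as requirements and design instead.

```python
from enum import Enum


class Phase(Enum):
    """SDLC steps as listed in this article, in Waterfall order (an assumption)."""
    PLANNING = 1
    IMPLEMENTING = 2
    TESTING = 3
    DOCUMENTING = 4
    DEPLOYING = 5
    MAINTAINING = 6


class WaterfallProject:
    """Hypothetical tracker enforcing the Waterfall rule: a phase must be
    completed and reviewed before the project can move to the next one."""

    def __init__(self):
        self.phases = list(Phase)  # Enum iteration preserves definition order
        self.index = 0
        self.reviewed = False

    @property
    def current(self):
        return self.phases[self.index]

    def review(self):
        """Record sign-off that the current phase is complete."""
        self.reviewed = True

    def advance(self):
        """Move to the next phase, refusing if the current one is unreviewed."""
        if not self.reviewed:
            raise RuntimeError(f"{self.current.name} must be reviewed before moving on")
        if self.index == len(self.phases) - 1:
            raise RuntimeError("already in the final phase")
        self.index += 1
        self.reviewed = False  # the new phase starts unreviewed
        return self.current


if __name__ == "__main__":
    project = WaterfallProject()
    project.review()
    print(project.advance())  # Phase.IMPLEMENTING
```

Iterative models such as Agile or Spiral relax exactly this constraint by allowing teams to loop back to earlier phases, which is why the strict one-way `advance` method is specific to Waterfall.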

Other frameworks used in software development include the V-shaped model, Agile, and Spiral. Data science has no direct equivalent to these processes, although many data scientists work within one of these approaches as part of a larger team. Pure software developers, meanwhile, have many roles to fill outside data science, from front-end development to DevOps and infrastructure.

Moreover, although data analytics pays well, software developer roles of all kinds are still in higher demand. So if machine learning isn’t your thing, you could spend your spare time building expertise in your own area of interest instead.

The Tools

A data scientist’s wheelhouse includes data analytics tools, machine learning, data visualization, working with databases, and predictive modeling. For data ingestion and storage, they would probably use MongoDB, Amazon S3, PostgreSQL, or something similar. For building models, there’s a good chance they would work with Scikit-learn or Statsmodels, and distributed processing of big data often calls for Apache Spark.

Software engineers work with software design and analysis tools, programming languages, software testing tools, web app tools, and so on. As with data science, much depends on what you’re trying to accomplish. For actually writing code, TextWrangler, Atom, Emacs, Visual Studio Code, and Vim are popular.

Python’s Django and Flask, along with Ruby on Rails, see plenty of use in backend web development. Vue.js has emerged as one of the best ways to create lightweight web apps, and AJAX is similarly useful for building dynamic web content that updates asynchronously. Everyone should know how to use a version control system such as Git, along with hosting services like GitHub.

The Skills

To become a data scientist, some of the most important things you need are machine learning, programming, data visualization, statistics, and a willingness to learn. Some positions may require more than these skills, but it’s a safe bet that they are the bare minimum for a data science career.

The skills needed to be a software developer are often a little more intangible. The ability to program in various programming languages is of course required, but you should also be able to work well in development teams, solve problems, adapt to different scenarios, and keep learning. Again, this isn’t an exhaustive list, but these skills will certainly serve you well if you’re interested in this career.

Conclusion

At the end of the day, you should choose a career path based on your strengths and interests. On average, at least, data scientists and software developers earn similar salaries. However, before deciding which path is better for you, consider experimenting with various projects and interacting with different parts of the business to see where your skills and personality fit best, since that is where you’ll grow the most.