Table of Contents

1 Introduction

2 The Emergence of Data Science

3 Big data technologies

4 Two data worlds: Predictive vs inferential statistics

5 How to study data science

6 Conclusions

7 References

Introduction

As a student of Statistics and the winner of a Data Science Scholarship, I am often surrounded by computer scientists, mathematicians, physicists and, of course, statisticians. In conversation I am often asked questions such as “So what do you actually do? What is Data Science?”. These are difficult questions and, as you will see while reading this document, many before me have tried to answer them. There is a dispute between statisticians and computer scientists about the origin of data science and about who should teach it. In the Institute of Mathematical Statistics presidential address, “Let us own data science”, we can find a simple recipe for a data scientist. [1]

“Putting the traits of Turner and Carver together gives a good portrait of a data scientist:

- Statistics (S)

- Domain/Science knowledge (D)

- Computing (C)

- Collaboration/teamwork (C)

- Communication to outsiders (C)

That is, data science = SDCCC = SDC³”

However, despite all the challenges that I will need to overcome in answering those questions, I will try. I will refer to ideas from several reputable sources, and I will also tell you what it is about data science that really fascinates me. What is so magical about this creation of statistics and computer science that I am drawn to it?

The Emergence of Data Science

On Tuesday, the 8th of September 2015, the University of Michigan announced its 100 million dollar “Data Science Initiative” (DSI), under which it will hire 35 new faculty members. On the DSI website we can read about this initiative:

“This coupling of scientific discovery and practice involves the collection, management, processing, analysis, visualisation, and interpretation of vast amounts of heterogeneous data associated with a diverse array of scientific, translational and interdisciplinary applications”2

But that sounds like bread and butter for statisticians. So, is it really a new creation, or is it something that has existed for many years but never sounded as sexy as data science? In an article by Karl Broman (University of Wisconsin) we can read:

“When physicists do mathematics, they don’t say they’re doing “number science”. They’re doing math. If you’re analyzing data, you’re doing statistics. You can call it data science or informatics or analytics or whatever, but it’s still statistics. If you say that one kind of data analysis is statistics and another kind is not, you’re not allowing innovation. We need to define the field broadly. You may not like what some statisticians do. You may feel they don’t share your values. They may embarrass you. But that shouldn’t lead us to abandon the term “statistics”.”

The definition of a data scientist in the Data Science Association’s “Professional Code of Conduct” reads:

“Data scientist means a professional who uses scientific methods to liberate and create meaning from raw data”

It sounds like K. Broman may be right. Maybe I should do an MSc in Statistics, as many before me did. Maybe data science is simply a new, sexy name for statistics, only the data is bigger and the technology more advanced than it used to be, so you need programming skills to handle the data. Maybe we should say out loud that data science is a modern version of statistics? But maybe not, because we can also find statements like the following:

“Statistics is the least important part of data science”. [3]

Further, we can read:

“There’s so much that goes on with data that is about computing, not statistics. I do think it would be fair to consider statistics (which includes sampling, experimental design, and data collection as well as data analysis (which itself includes model building, visualization, and model checking as well as inference)) as a subset of data science. . . .”.[3]

So maybe the people from computer science are right. Maybe I should go and study programming and forget about expanding my knowledge of statistics? After all, we all know that computer science has always had much bigger funding, and an MSc in Computer Science has always been like a magic star for employers. What should I do? Let me research further.

Big data technologies

Is data size what distinguishes data science from statistics? Going back to the “Let us own data science” address, we can read that a statistician, Hollerith, invented the punched card reader to allow efficient compilation of a US census: the first elements of machine learning. So, no, machine learning is not an invention of computer scientists; it has been well known to statisticians for decades. And what about the techniques used in DOE (Design of Experiments), or the sampling methods used to decrease the sample size? If statisticians only ever worked with small data, they would not have had to discover dimensionality reduction techniques such as PCA (principal component analysis). So, no, data can be big or small for statisticians. What, then, is the difference between data science and statistics, and which department should I choose?

When I speak to computer scientists, they try to convince me to choose a computer science department. Their reasoning is that there are many different languages and tools that I need to know to deal with large datasets, for instance Java, Hadoop, SQL, Python and many more, and that programming can only be taught to the best standard through computer science courses. Is that true? Can’t we do the same calculations using statistical software such as R, SAS or even Matlab? On the other hand, doesn’t the newest technology always work faster? And if so, wouldn’t it be better to use the newest technology when we program and write loops?

But I don’t want to underestimate the effort made by statisticians and data analysts over the last 50 years in developing statistical software. Their efforts contributed to the emergence of today’s technology. Early statistical packages such as SPSS and Minitab (from the 1960s and early 1970s) paved the way for more advanced software with roots in the minicomputer era, such as Stata or my favourite, R, which today sits alongside Python, Hadoop, SQL and so on. Becker and Chambers (with S) and later Ihaka, Gentleman and the members of the R Core Team (with R) worked on developing this statistical software. These names should convince anyone of how powerful statistical programming languages can be. Many operations that we can do in Hadoop or SQL we can also do easily in R.

Two data worlds: Predictive vs inferential statistics

So maybe data science is a creature created by statisticians working in computer science departments? Maybe there are two different approaches to statistics, mathematical statistics and computer science statistics, and the computer science statisticians are the data scientists, because according to Yanir Seroussi in his blog:

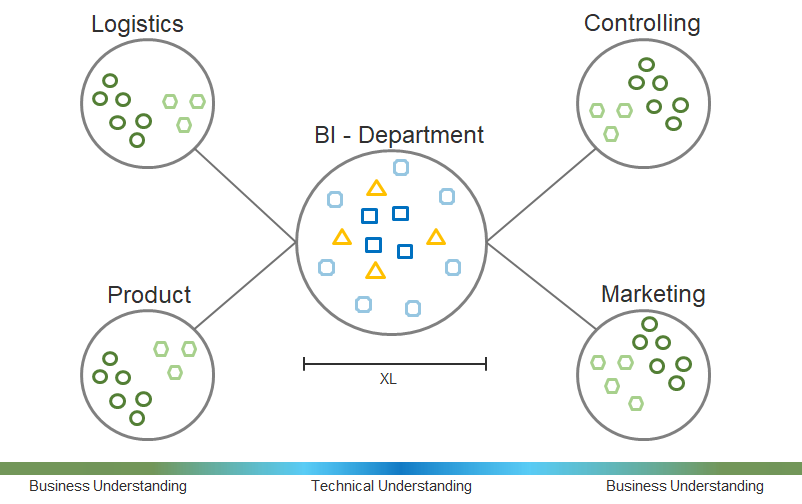

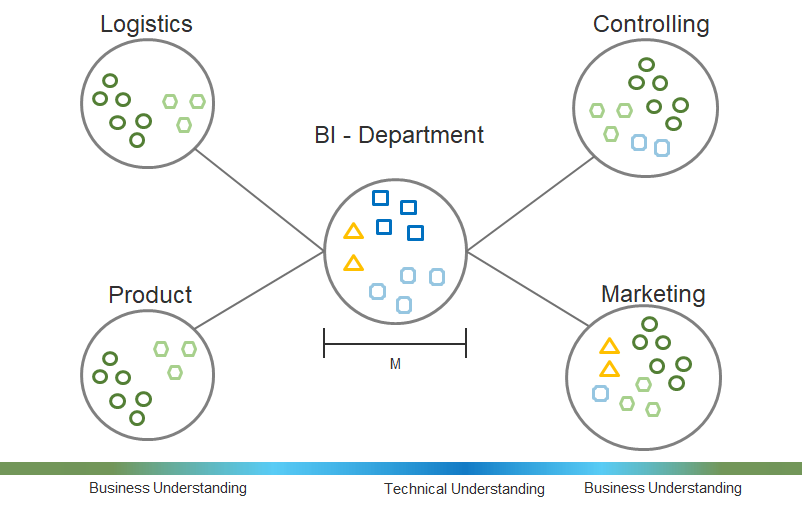

“A successful data scientist needs to be able to “become one with the data” by exploring it and applying rigorous statistical analysis (right-hand side of the continuum). But good data scientists also understand what it takes to deploy production systems, and are ready to get their hands dirty by writing code that cleans up the data or performs core system functionality (left-hand side of the continuum). Gaining all these skills takes time.”[4]

Okay, so my reasoning that some statisticians work in computer science departments is right, and there is also a subject called computational statistics. So maybe I should go to a computer science department but study statistics.

In fact, I am not the first one to arrive at this conclusion. Everything started with a confession made by John Tukey in “The Future of Data Analysis”, an article published in The Annals of Mathematical Statistics:

“For a long time, I have thought I was a statistician, interested in inferences from the particular to the general. But as I have watched mathematical statistics evolve, I have had cause to wonder and to doubt. … All in all I have come to feel that my central interest is in data analysis, which I take to include, among other things: procedures for analyzing data, techniques for interpreting the results of such procedures, ways of planning the gathering of data to make its analysis easier, more precise or more accurate, and all the machinery and results of (mathematical) statistics which apply to analyzing data.”

If I am right, then the above confession was a critical moment: the time when mathematical statistics became more inferential and computational statistics concentrated more on prediction. Applied statisticians working on predictive analytics, who are more interested in applying knowledge than in developing long proofs, decided to move to computer science departments.

Additionally, the following discussion by Leo Breiman, from his paper “Statistical Modeling: The Two Cultures” published in Statistical Science, is crucial. It enables us to understand and differentiate the views of both types of statistician, namely the mathematical (inferential) and the computational (predictive).

“Statistics starts with data. Think of the data as being generated by a black box in which a vector of input variables x (independent variables) go in one side, and on the other side the response variables y come out. Inside the black box, nature functions to associate the predictor variables with the response variables … There are two goals in analyzing the data:

- Prediction. To be able to predict what the responses are going to be to future input variables

- Inference. To [infer] how nature is associating the response variables to the input variables.”
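To make the two goals concrete, here is a minimal sketch in Python. It is my own toy illustration, not something taken from the paper: the “nature” inside the black box is assumed to be y = 1 + 2x plus noise, and the helper `fit_line`, the sample sizes and the random seed are all hypothetical choices. The same fitted line is used once for inference (what is the slope, and how uncertain is it?) and once for prediction (how well does it do on data it has not seen?).

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x. Returns (a, b, se_b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    # Residual sum of squares gives the noise variance estimate rss / (n - 2).
    rss = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    se_b = math.sqrt(rss / (n - 2) / sxx)
    return a, b, se_b


# Assumed "black box": y = 1 + 2x + Gaussian noise (made up for this sketch).
rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(200)]
ys = [1.0 + 2.0 * x + rng.gauss(0, 1) for x in xs]

# Keep the last 50 observations aside as "future" inputs.
train_x, test_x = xs[:150], xs[150:]
train_y, test_y = ys[:150], ys[150:]

a, b, se_b = fit_line(train_x, train_y)

# Inference: how is nature associating y with x? (approximate 95% interval)
ci = (b - 1.96 * se_b, b + 1.96 * se_b)

# Prediction: how well do we predict responses to inputs we have not seen?
mse = sum((y - (a + b * x)) ** 2 for x, y in zip(test_x, test_y)) / len(test_x)

print(f"slope estimate {b:.3f}, approx. 95% CI ({ci[0]:.3f}, {ci[1]:.3f})")
print(f"held-out mean squared error {mse:.3f}")
```

In this toy case one model serves both goals. The disagreement between the two cultures starts when the model that predicts best is not one whose parameters can be interpreted.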

Furthermore, in the same paper we can read:

“The statistical community has been committed to the almost exclusive use of [generative] models. This commitment has led to irrelevant theory, questionable conclusions, and has kept statisticians from working on a large range of interesting current problems. [Predictive] modeling, both in theory and practice, has developed rapidly in fields outside statistics. It can be used both on large complex data sets and as a more accurate and informative alternative to data modeling on smaller data sets. If our goal as a field is to use data to solve problems, then we need to move away from exclusive dependence on [generative] models …”

So, we can say that data science evolved from predictive analytics, which in turn evolved from statistics, but it has become a separate science. Tukey and Wilk (1969) compared this new science to established sciences and further circumscribed the role of statistics within it:

“ … data analysis is a very difficult field. It must adapt itself to what people can and need to do with data. In the sense that biology is more complex than physics, and the behavioural sciences are more complex than either, it is likely that the general problems of data analysis are more complex than those of all three. It is too much to ask for close and effective guidance for data analysis from any highly formalized structure, either now or in the near future. Data analysis can gain much from formal statistics, but only if the connection is kept adequately loose”

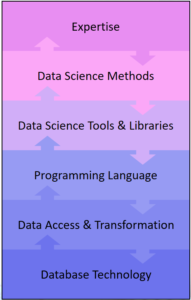

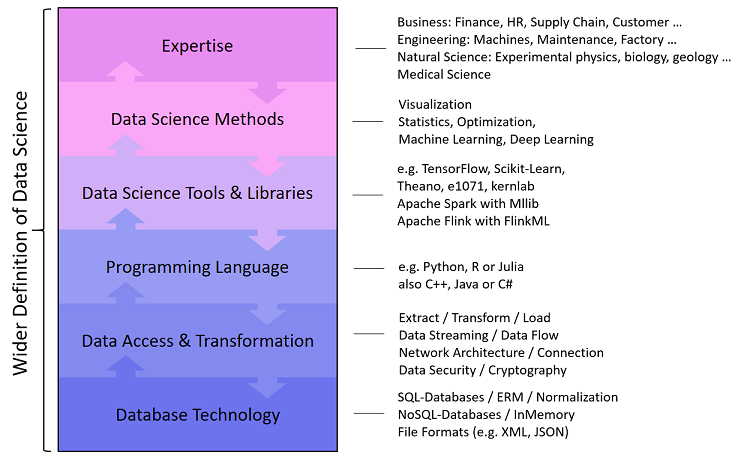

How to study data science

So, what exactly is the predictive analytics culture? I think that everyone who has entered a Kaggle competition will agree with me that the common task framework (CTF), as formulated by Mark Liberman in 2009, is a perfect description of Kaggle competitions and of hackathon events, where the latter work as training sessions for newbies in the data world. An instance of the CTF has these ingredients:

- A publicly available training data set involving, for each observation, a list of (possibly many) feature measurements, and a class label for that observation.

- A set of enrolled competitors whose common task is to infer a class prediction rule from the training data.

- A scoring referee, to which competitors can submit their prediction rule. The referee runs the prediction rule against a testing dataset which is sequestered behind a Chinese wall. The referee objectively and automatically reports the score (prediction accuracy) achieved by the submitted rule.
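The three ingredients above can be sketched in a few lines of Python. This is only a toy illustration under my own assumptions: the `Referee` class, the two competitors and the tiny one-feature datasets are all made up, and real platforms such as Kaggle are of course far more elaborate.

```python
from typing import Callable, List, Sequence, Tuple

Example = Tuple[Sequence[float], int]   # (feature measurements, class label)
Rule = Callable[[Sequence[float]], int]  # a prediction rule: features -> label


class Referee:
    """Keeps the test set sequestered and reports only a score."""

    def __init__(self, test_set: List[Example]) -> None:
        self._test_set = test_set  # never shown to competitors

    def score(self, rule: Rule) -> float:
        """Prediction accuracy of the submitted rule on the hidden test set."""
        correct = sum(1 for features, label in self._test_set
                      if rule(features) == label)
        return correct / len(self._test_set)


# Ingredient 1: a publicly available training set (made-up numbers).
training_set: List[Example] = [
    ([1.0], 0), ([2.0], 0), ([3.0], 0),
    ([6.0], 1), ([7.0], 1), ([8.0], 1),
]

# Ingredient 3: the referee with its hidden test set (also made up).
referee = Referee([([0.5], 0), ([2.5], 0), ([4.0], 1), ([9.0], 1)])


# Ingredient 2: competitors who infer a prediction rule from the training data.
def learn_threshold_rule(train: List[Example]) -> Rule:
    """Put a threshold halfway between the two class means."""
    class0 = [features[0] for features, label in train if label == 0]
    class1 = [features[0] for features, label in train if label == 1]
    threshold = (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2
    return lambda features: int(features[0] > threshold)


def always_zero(features: Sequence[float]) -> int:
    """A naive baseline competitor that ignores the data."""
    return 0


leaderboard = {
    "threshold": referee.score(learn_threshold_rule(training_set)),
    "baseline": referee.score(always_zero),
}
print(leaderboard)  # {'threshold': 0.75, 'baseline': 0.5}
```

The important design choice is that competitors only ever see `training_set` and the number returned by `score`, so nobody can tune a rule by looking at the test labels.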

Kaggle competitions are not only training platforms for newbies like me but also very challenging statistical competitions where experienced statisticians can win “pocket money”. A famous example of such a competition is the Netflix Challenge, where the common task was to predict Netflix users’ movie selections. The winning team (which included AT&T statistician Bob Bell) won 1 million dollars.

Comparing the modules available on the master’s in data science at the University of California, Berkeley [6], we can group them by discipline:

- Both

  - Applied machine learning

  - Experiments and causality

- Statistics

  - Research design and application for data and analysis

  - Statistics for Data Science

  - Behind the data: humans and values

  - Statistical methods for discrete response, time series and panel data

  - Data visualisation

- Computer Science

  - Python for Data Science

  - Storing and Retrieving Data

  - Scaling up! Really Big Data

  - Machine Learning at scale

  - Natural Language Processing with Deep Learning

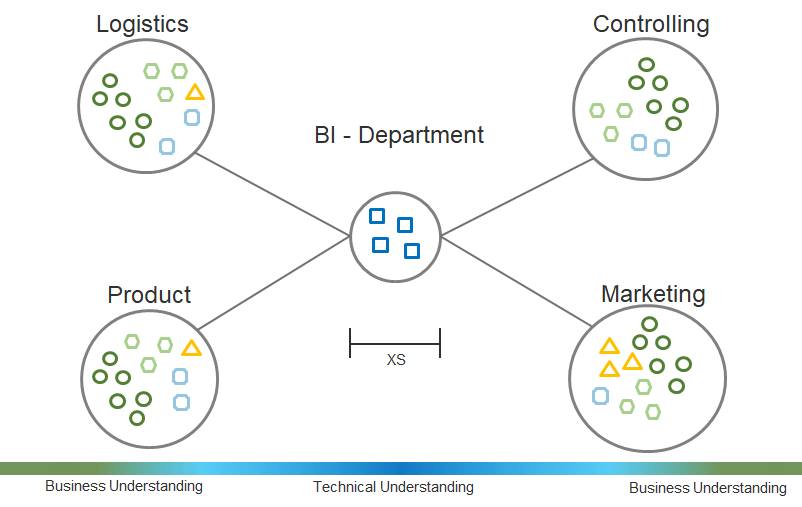

We can really see that data science is a subject that demands skills from both computer science and statistics. So, it is another confirmation for me that this is the best time to change department for my postgraduate study, that is, to study statistics in a computer science department.

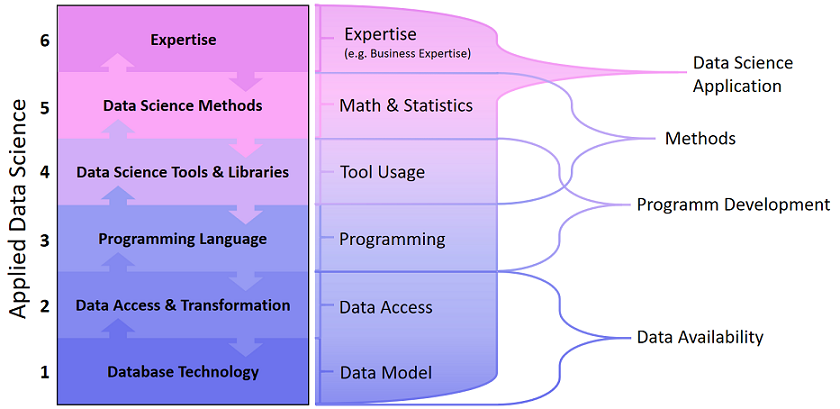

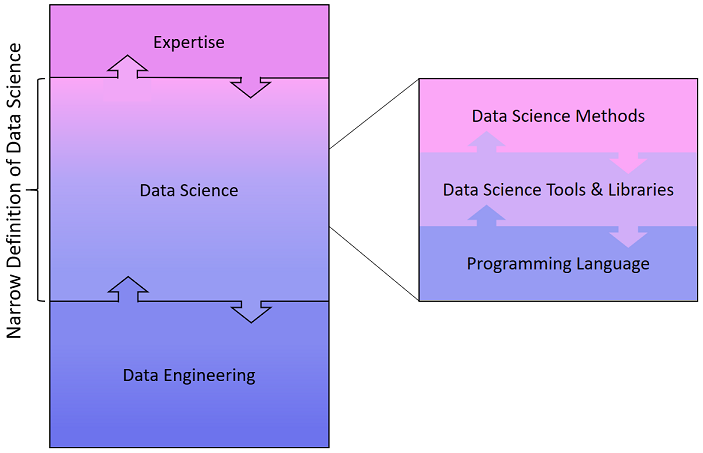

In the “50 Years of Data Science” article we can read: “The activities of Greater Data Science are classified into 6 divisions:

- Data exploration and preparation

- Data representation and transformation

- Computing with data

- Data visualization and presentation

- Data Modelling

- Science about data science” [5]

I will quickly go through all of them using my Ebola research as an example; it required applying machine learning to time series data.

- Data exploration and preparation: the most demanding part. Many people told me before I started this project that collecting, cleaning, wrangling and preparing data takes 60% of all the time you spend on a data science project. I didn’t realise how much that 60% means in real time, or that I would be exhausted afterwards. Exhausted, but ready for the next step.

- Data representation and transformation: this point is really part of the first one, or maybe, like many other things in statistics, everything is one huge connected bunch. The data you find can be very nice and well behaved, stored in a CSV, JSON or other file that you can quickly download and use. But what if it is not? What if your data is “dirty” and not stored as a file (e.g. it only appears on a website)? What if the data is coded? Do you need to decode it?

- Computing with data: an even bigger challenge, but what fun! You need to know a few different programming languages, or at least, as I do, a little bit of R, a little bit of Python, and Tableau and Excel quite well, so that you can use different programs in different scenarios or for different tasks. For example, pandas for EDA and ggplot2 for data visualisation.

- Data visualization and presentation: graphs are pretty, right? If you are still reading my article, I bet you know what a heat map, a spatial visualisation of a big city or an infographic is. I would like to stress that we respect only the ones that are not just pretty but also valid. The time required to create these visualisations is another matter.

- Data modelling, finally! I don’t need to say a lot about this. All forms of inferential and predictive analytics are allowed and accepted.

- Science about data science: my favourite part, and not the end yet. All the conferences and meetups that I can attend. All the seminars where we all present our current projects.
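The “dirty data that only appears on a website” situation from the first two points can be sketched with nothing more than Python’s standard library. This is a minimal illustration under my own assumptions: the HTML snippet, the `TableParser` class, the `clean_count` helper and the counts in the table are all made up and are not real research data.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every table cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def clean_count(text):
    """Turn strings like ' 1,234 ' into 1234; anything else becomes None."""
    digits = text.replace(",", "").strip()
    return int(digits) if digits.isdigit() else None


# A made-up page fragment: thousands separators, stray spaces, missing values.
page = """
<table>
  <tr><th>Week</th><th>Cases</th></tr>
  <tr><td>2014-W40</td><td> 1,234 </td></tr>
  <tr><td>2014-W41</td><td>n/a</td></tr>
  <tr><td>2014-W42</td><td>987</td></tr>
</table>
"""

parser = TableParser()
parser.feed(page)
header, *body = parser.rows
records = [{"week": week, "cases": clean_count(cases)} for week, cases in body]
print(records)
```

Even in this tiny example most of the code deals with getting the data into shape rather than with modelling it, which matches the 60% rule of thumb.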

Conclusions

After graduation, I will be a graduate statistician. More precisely, I will be a mathematical statistician who mostly dealt with inferential statistics during the degree. On the other hand, winning the data science scholarship gave me exposure to predictive analytics, which I greatly enjoyed. Therefore, for my next stage, I will simply change department and concentrate more on predictive analytics. There are many statisticians working in computer science departments. They possess both statistical knowledge and advanced software engineering skills, and they are called data scientists. It would be a pleasure for me to join them. I don’t mind whether it will be an MSc in Computer Science, Data Science, Big Data or whatever the name will be. What I do mind is getting sufficient exposure to dealing with “dirty” data through statistical modelling and machine learning with modern technology. This is what data science is for me. Maybe for you it will be something else. Maybe you will be more satisfied with massively expanding your programming skills. But for me, programming is a tool, modern technology is my friend, and my bread and butter will be predictive analytics.

References

1. IMS Presidential Address: Let us own data science

2. Data science is statistics

3. A. Gelman, Columbia University

4. Yanir Seroussi: What is data science?

5. 50 Years of Data Science

6. Curriculum: datascience@berkeley